I’ve found that one of the most common failure points in a growing business happens silently. An automation misfires. A lead gets routed to the wrong person. A critical notification never gets sent.

When someone finally notices, the first question is always the same: “What did the system do, and why?” If the answer is a collective shrug, you don’t have a system; you have a black box.

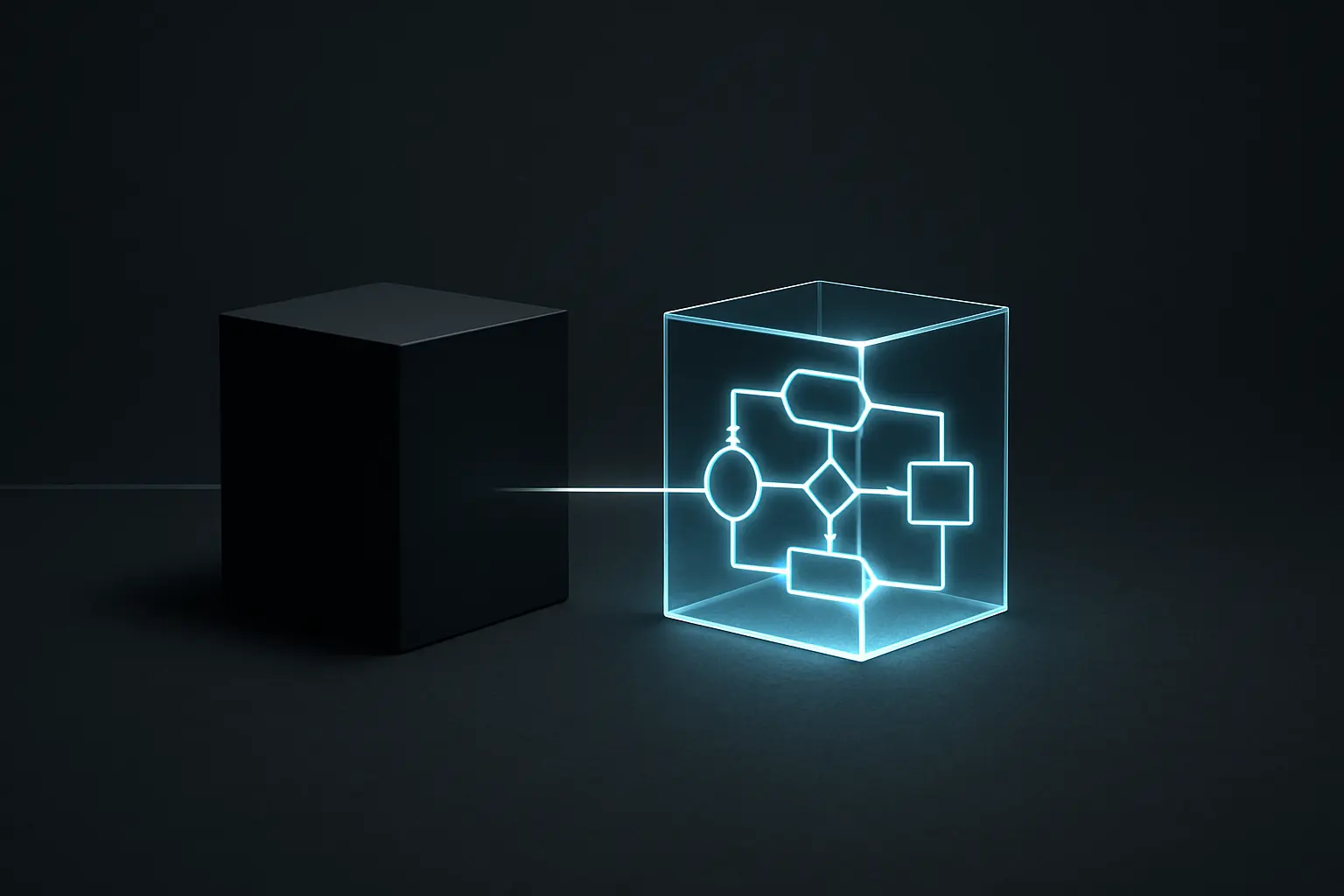

This is a problem I’ve been focused on lately. As we build more complex automated workflows at JvG Technology and for our marketing experiments at Mehrklicks, the risk of creating opaque, untrustworthy systems grows. For me, a core tenet of systems thinking is that a system you can’t understand is a system you can’t control, scale, or improve. We’ve adopted a simple but powerful principle: we don’t build black boxes. We build glass boxes.

The Hidden Cost of ‘Black Box’ Automation

A ‘black box’ system is one where you can see the inputs and outputs, but the decision-making process in between is a mystery. You put data in, something happens, and a result comes out, which works fine until it produces an unexpected result.

The problem isn’t just technical; it’s deeply human. When a process is opaque, it erodes trust. Research from Harvard Business Review confirms this: a lack of transparency leads directly to “mistrust among employees, customers, and partners, who may be skeptical of decisions they cannot understand.” If your team doesn’t trust the automation, they’ll create manual workarounds, defeating the entire purpose of the system.

This isn’t a niche issue. A McKinsey Global Institute report found that 60% of business leaders cite a lack of explainability as a major barrier to adopting AI and automation. When teams can’t interpret a system’s logic, they can’t effectively debug, improve, or rely on its outputs. That’s a direct cap on scalability.

Our Approach: The ‘Glass Box’ Principle

A ‘glass box,’ by contrast, is a system designed for transparency. The goal is for any team member, regardless of their technical expertise, to be able to look at a decision the system made and understand the ‘why’ behind it. It’s about making the logic visible.

The difference is simple but profound. A black box gives you an answer. A glass box gives you the answer and shows its work.

This commitment to transparency is about building for effective human-machine collaboration, which, as MIT Sloan Management Review highlights, hinges on ‘interpretability’: the degree to which a person can understand the cause of a decision. Their research confirms what we’ve seen in practice: visual aids like flowcharts and decision trees dramatically improve both understanding and confidence in a system.

How We Build and Document Our Glass Boxes

Making a system transparent doesn’t happen by accident; it has to be a deliberate part of the design process. Here are a few practical rules we follow.

Rule 1: Document the ‘Why’ Before the ‘How’

Before we build a single step of an automation, we write a brief internal ‘Decision Memo.’ It’s usually just a paragraph, but it answers three critical questions in plain English:

- Goal: What is the specific outcome we want to achieve?

- Trigger: What event or condition initiates this process?

- Key Logic: What are the primary rules that will guide the decision? (e.g., “If the lead source is ‘Website’ and the budget is over €10k, assign to the senior sales team.”)

This memo becomes the constitution for the automation. It forces clarity and ensures the business logic is sound before any code or modules are connected.
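To make the memo idea concrete, here is a minimal Python sketch of how a memo and its key logic could sit side by side. Everything in it is an assumption for illustration: the `DecisionMemo` and `Decision` structures, the `route_lead` function, the goal and trigger wording, and the `standard_sales` fallback are hypothetical. Only the example rule itself comes from the memo description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionMemo:
    """Plain-English record of why an automation exists (hypothetical structure)."""
    goal: str
    trigger: str
    key_logic: str


@dataclass(frozen=True)
class Decision:
    team: str
    reason: str  # the "why", written so a non-technical reader can follow it


# Goal and trigger wording are assumed; the key logic is the example rule from the memo.
LEAD_ROUTING_MEMO = DecisionMemo(
    goal="Route high-value website leads to the senior sales team.",
    trigger="A new lead is created.",
    key_logic="If the lead source is 'Website' and the budget is over €10k, "
              "assign to the senior sales team.",
)

SENIOR_BUDGET_THRESHOLD_EUR = 10_000


def route_lead(source: str, budget_eur: float) -> Decision:
    """Apply the memo's key logic and return the decision together with its reason."""
    if source == "Website" and budget_eur > SENIOR_BUDGET_THRESHOLD_EUR:
        return Decision(
            team="senior_sales",
            reason=f"Source is 'Website' and budget {budget_eur:,.0f} EUR "
                   f"is over {SENIOR_BUDGET_THRESHOLD_EUR:,} EUR.",
        )
    # Assumed fallback: the memo example does not say where other leads go.
    return Decision(
        team="standard_sales",
        reason=f"Source is '{source}' with budget {budget_eur:,.0f} EUR, "
               "so the senior-team rule did not match.",
    )
```

Returning the reason alongside the result is the design choice that matters here: the code cannot make a decision without also saying why, so the memo and the implementation stay easy to compare.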

Rule 2: Visualize the Decision Flow

We map out every significant automation visually. For a project like automating our client onboarding, we created a flowchart that showed every conditional split, every API call, and every potential failure point. This visual map is invaluable, serving as living documentation that’s far easier to parse than lines of code or a complex web of connected modules.

Here’s a simplified look at what a segment of one of our automation logs in Make.com might show. Notice how you can trace the exact path the data took.

This visual proof is the core of the glass box. There’s no guessing. The path taken is recorded and clear for anyone to see.
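The same idea can be sketched in plain code. The Python example below is not Make.com's log format; it is a generic illustration, and the `PathTrace` class, the `onboard_client` function, and the onboarding steps are all hypothetical. It simply records each step and branch a record passes through so the path can be read back afterwards.

```python
from dataclasses import dataclass, field


@dataclass
class PathTrace:
    """Records, in order, each step a record passes through (hypothetical helper)."""
    steps: list[str] = field(default_factory=list)

    def record(self, step: str, outcome: str) -> None:
        self.steps.append(f"{step}: {outcome}")

    def render(self) -> str:
        """Return the path as a numbered, human-readable list."""
        return "\n".join(f"{i}. {s}" for i, s in enumerate(self.steps, start=1))


def onboard_client(client: dict, trace: PathTrace) -> None:
    """Assumed two-step onboarding flow with one conditional split."""
    trace.record("Received client", client.get("name", "<no name>"))
    if client.get("contract_signed"):
        trace.record("Check contract", "signed -> send welcome email")
    else:
        trace.record("Check contract", "not signed -> send reminder")
```

Calling `onboard_client(...)` and then `trace.render()` yields one numbered line per step, including which branch was taken at the conditional split.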

Rule 3: Create Human-Readable Logs

An error log that just says “Process Failed: Error 502” is a black box message. A glass box log says “Process Failed: Could not connect to the CRM API. The connection timed out after 30 seconds.”

We configure our systems to output descriptive logs. While this takes a little more effort upfront, the payoff is enormous. It transforms troubleshooting from a specialized technical task into a simple exercise in logic. Investing in good documentation here is one of the highest-leverage activities you can undertake for the long-term health of your systems.
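As a rough sketch of what descriptive logging can look like in code, the Python example below turns a raw exception into a sentence a non-technical reader can act on. The function names `describe_failure` and `run_step`, their parameters, and the logger name are assumptions for illustration, not the configuration of any particular platform.

```python
import logging

logger = logging.getLogger("automation")  # logger name is an assumption


def describe_failure(step: str, target: str, exc: Exception, timeout_s: float) -> str:
    """Translate an exception into a plain-English failure message."""
    if isinstance(exc, TimeoutError):
        return (f"{step} failed: Could not connect to {target}. "
                f"The connection timed out after {timeout_s:g} seconds.")
    if isinstance(exc, ConnectionError):
        return (f"{step} failed: Could not connect to {target}. "
                "The connection was refused or dropped.")
    # Unknown failure: still name the error type instead of a bare code.
    return f"{step} failed: {type(exc).__name__}: {exc}"


def run_step(step: str, target: str, action, timeout_s: float) -> None:
    """Run one automation step; log a descriptive message if it fails, then re-raise."""
    try:
        action()
    except Exception as exc:
        logger.error(describe_failure(step, target, exc, timeout_s))
        raise
```

For example, `describe_failure("Process", "the CRM API", TimeoutError(), 30)` produces a message of the same shape as the glass box log described above, naming the system that could not be reached and how long the step waited.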

The Real Payoff: Troubleshooting in Minutes, Not Days

The true value of the glass box approach becomes obvious when something breaks. With a transparent system, we can use simple but powerful frameworks like the ‘Five Whys’ to find the root cause in minutes.

Imagine a lead from a new marketing campaign never got assigned to a salesperson. Here’s how that analysis looks with a glass box:

- Why wasn’t the lead assigned? The log shows the automation stopped at the ‘Assign Owner’ step.

- Why did it stop there? The log says, “Error: Rule ‘EU Leads’ returned no valid owner.”

- Why did the rule return no owner? Looking at the rule’s logic, it assigns based on the ‘Country’ field.

- Why did the ‘Country’ field cause an issue? The input data shows the ‘Country’ field for this lead was empty.

- Why was the field empty? The web form for the new campaign didn’t include a mandatory country field.

Root Cause: The web form was configured incorrectly. The automation worked perfectly according to its rules.
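The walkthrough above only works if the rule itself reports why it produced no owner. Here is a minimal Python sketch of such a rule. The `NoOwnerError` class, the `assign_owner_eu` function, and the country-to-owner mapping are hypothetical; only the rule name ‘EU Leads’ and the ‘Country’ field come from the scenario.

```python
class NoOwnerError(Exception):
    """Raised when a routing rule cannot pick an owner (hypothetical error type)."""


# Hypothetical mapping of country codes to salespeople.
EU_OWNERS = {"DE": "anna", "FR": "luc"}


def assign_owner_eu(lead: dict) -> str:
    """Assign an owner from the lead's 'Country' field, explaining any failure."""
    country = (lead.get("Country") or "").strip()
    if not country:
        # Name the rule and the exact cause, so the next "why" is already answered.
        raise NoOwnerError(
            "Rule 'EU Leads' returned no valid owner: the 'Country' field was empty."
        )
    owner = EU_OWNERS.get(country.upper())
    if owner is None:
        raise NoOwnerError(
            f"Rule 'EU Leads' returned no valid owner: "
            f"no owner is mapped to country '{country}'."
        )
    return owner
```

With messages like these, the second and fourth questions in the analysis can be answered from the error text alone, without opening the rule.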

With a black box, this investigation could take days of guesswork. With a glass box, it took five questions and a few minutes of reading clear logs. This isn’t just about saving engineering headaches. Research from Accenture shows that companies practicing ‘Explainable AI’ (XAI), a closely related term for this kind of transparency, see a 15% higher ROI from their initiatives, driven by faster adoption, better compliance, and quicker error identification.

Frequently Asked Questions (FAQ)

What’s the difference between a ‘black box’ and a ‘glass box’?

A ‘black box’ shows you the inputs and outputs but hides the internal process. A ‘glass box’ is designed to make that internal process visible and understandable to anyone, helping build trust and simplify troubleshooting.

Isn’t this level of documentation a lot of extra work?

It requires more work upfront, but it saves multiples of that time down the line. The cost of a single critical system failure that takes days to diagnose is almost always higher than the cumulative time spent documenting your systems properly from the start.

What tools do you recommend for visualizing automation?

For high-level planning, tools like Miro, Whimsical, or even simple diagram software work well. For execution, many modern automation platforms like Make.com (formerly Integromat) or Zapier offer visual builders that serve as a form of documentation themselves. The key is to choose a tool that lets you see the flow of data.

Can this apply to simple automations, or just complex AI?

This principle is even more important for simple automations because they multiply so quickly within an organization. A dozen simple, undocumented ‘black box’ Zaps can create far more chaos than one complex but well-documented AI model. The glass box approach scales from the simplest workflow to the most advanced system.

Moving from Opaque to Transparent

Building systems that you can trust is fundamental to scaling a business. That trust doesn’t come from a system being perfect; it comes from it being understandable. By shifting our perspective from building black boxes to architecting glass boxes, we create a resilient, scalable foundation where anyone on the team can feel confident in the tools they use.

A good first step is to pick one of your existing automations. If it were to fail right now, could you explain to a colleague exactly what went wrong and why? If not, you might have a black box on your hands. Making its logic visible is the first step toward building a more transparent and reliable system.